Featured Blogs

Staying the Course: How Long-Life Infrastructure Protects Healthcare’s Most Critical Investments

February 2026

Healthcare organizations face pressure to modernize, secure data, and meet compliance requirements, all while managing tight budgets and high patient volumes. When infrastructure reaches end-of-life (EOL) too quickly, it disrupts systems, forces requalification of workflows, and leads to unexpected costs.

Executives are left asking: How do we invest in technology that keeps pace with innovation, compliance, and cost?

The Problem

Most commercial technology refreshes every 18 to 24 months. That pace creates chaos in healthcare. New devices or drivers mean revalidation, regulatory review, and retraining. For hospitals using imaging, lab, or monitoring devices, these disruptions affect operational efficiency, continuity, and patient care.

It’s not just an IT inconvenience; it’s a business risk. When suppliers unexpectedly change components or firmware, healthcare teams must rebuild compliance documentation, recertify interfaces, and retest software that previously worked.

The financial impact of short lifecycle infrastructure is hidden in operational friction:

- Costly recertification and revalidation

- Procurement delays caused by supply gaps

- Extended downtime in critical clinical systems

- Compromised compliance reporting

The Insight

Long-life infrastructure gives organizations control. Hardware consistency for 5 to 10 years means stable validation cycles, budgets, and innovation plans. With long-lifecycle systems like HP Z platforms, executives can:

- Maintain validated hardware configurations over time

- Simplify compliance management with consistent documentation

- Plan technology refreshes around strategic needs instead of vendor timelines

- Extend depreciation across 5 to 10 years to reduce total cost of ownership

A long-life platform isn’t just reliable; it’s also predictable. It’s financially responsible and operationally resilient.

What Stability Looks Like in Practice

Imagine a hospital that deploys HP OEM compute systems across its imaging network. Each workstation maintains the same validated configuration for years, supported by HP’s proactive end-of-life reporting and long-term parts availability.

When new applications are added, IT teams integrate them confidently, knowing the platform stays compliant and stable. Consistency across sites and departments boosts efficiency, reduces risk, and supports patient care.

The Business Outcome

Executives who prioritize lifecycle stability achieve measurable results:

- Lower operational risk through predictable technology behavior

- Stronger financial planning with fewer surprise refresh costs

- Faster adoption of innovation because systems stay validated longer

- Greater confidence with regulators and auditors through continuity of compliance

This is the foundation of sustainable digital transformation in healthcare.

The Decision

Choosing long-lifecycle infrastructure is a strategic investment in reliability, compliance, and agility. It protects capital, preserves certifications, and keeps focus on better outcomes for patients and providers. When healthcare builds on platforms designed to last, organizations stop reacting to hardware change and start driving strategic progress.

Learn why over 70% of the world's largest medical device OEMs work with Arrow to advance their medical innovations.

The Memory Market Update That Matters Right Now

February 2026

The memory market has moved into a tighter, more managed environment. This is not a typical cycle in which pricing falls during excess supply and rises when demand returns. The current phase is being shaped by two durable forces: suppliers are actively steering capacity toward higher-value products, and demand is being reallocated toward AI infrastructure that is more memory-intensive than prior compute generations.

This dynamic was underscored at CES, where NVIDIA CEO Jensen Huang emphasized that the limiting factor for AI systems is no longer raw compute but memory. As models scale, he highlighted that memory bandwidth, memory capacity, and data movement are becoming fundamental constraints on performance and efficiency.

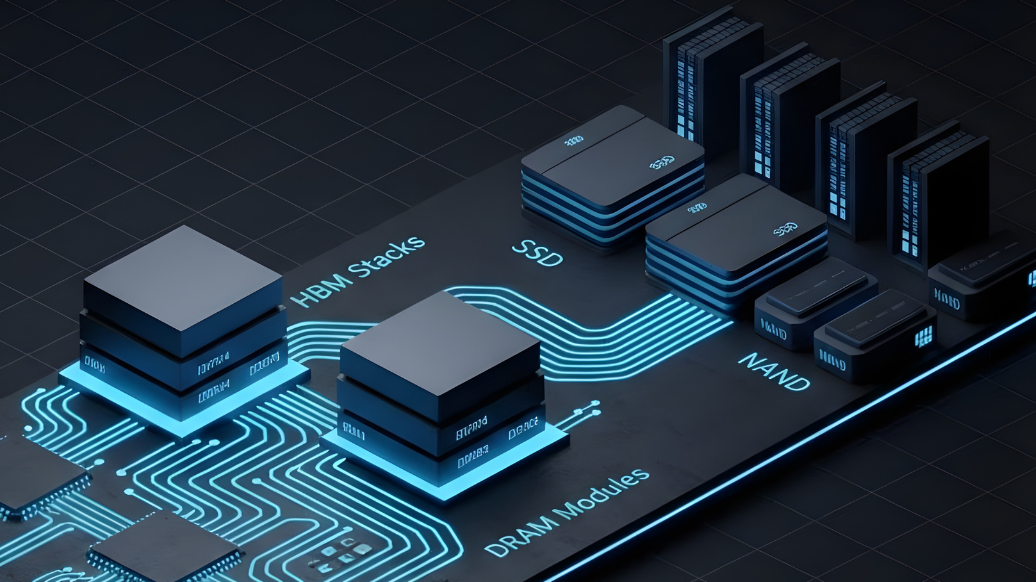

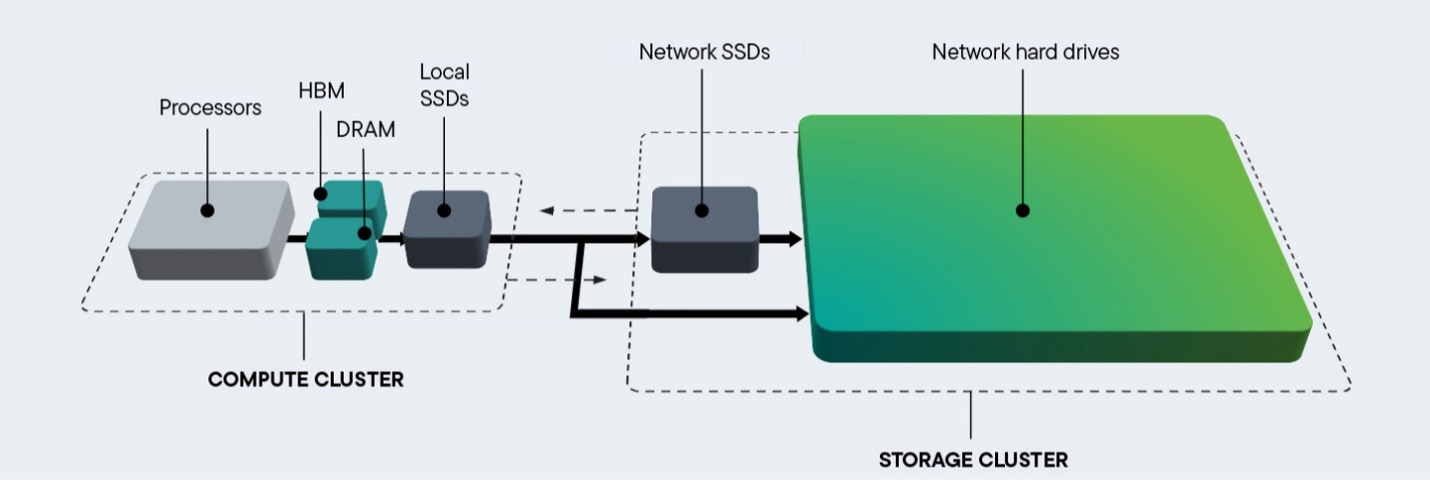

Figure: AI data interacts with a variety of storage and memory devices (source: Seagate)

In practical terms, more memory must sit closer to compute, and it must move data faster and at scale. That reality places sustained pressure on the memory ecosystem well beyond accelerators alone. When those resources become critical to uptime and schedule, purchasing behavior changes. Customers commit earlier, hold more buffer inventory for hard-to-replace parts, and prioritize continuity over spot optimization.

Why is capacity constrained?

Capacity constraints today are less about a single chokepoint and more about product mix and transition timing.

For DRAM, suppliers are concentrating investment and output on products tied to long-term growth and profitability.

Two areas stand out: DDR5 for modern platforms and HBM for accelerators.

As capacity and engineering focus shift toward these segments, legacy DRAM receives less support, especially in DDR4. Even where DDR4 demand remains meaningful due to the installed base and longer qualification cycles, memory suppliers have clear incentives to reduce exposure to low-margin legacy parts and move the market forward. As a result, DDR4 is increasingly characterized by constrained availability, less pricing elasticity, and a higher risk of allocation.

For NAND, the conversation is more nuanced.

NAND wafer supply matters, but finished product availability often depends on additional components and manufacturing steps. Enterprise SSDs, for example, rely on controllers, substrates, PCBs, power loss protection components, and, in many cases, drive DRAM. If any of these elements become constrained, finished drive output tightens even if NAND supply is improving. Buyers sometimes underestimate this, assuming that a better NAND supply picture automatically translates to abundant SSD availability. Constraints can migrate across the bill of materials.

What memory makers are doing

Rather than expanding output aggressively at the first sign of recovery, memory suppliers are focusing on profitability, product mix, and long-term positioning. In practical terms, that means:

- Prioritizing higher-value DRAM segments, particularly DDR5 and HBM.

- Exiting or de-emphasizing lower-margin businesses to focus on higher-value, AI-driven memory products.

- Using allocation frameworks that favor customers with credible forecasts and committed demand.

- Managing capital spending to avoid creating the kind of oversupply that would rapidly reset pricing.

For customers, this matters because it changes the rules of engagement. In a more managed market, availability can depend as much on planning and commitments as it does on willingness to pay in the moment.

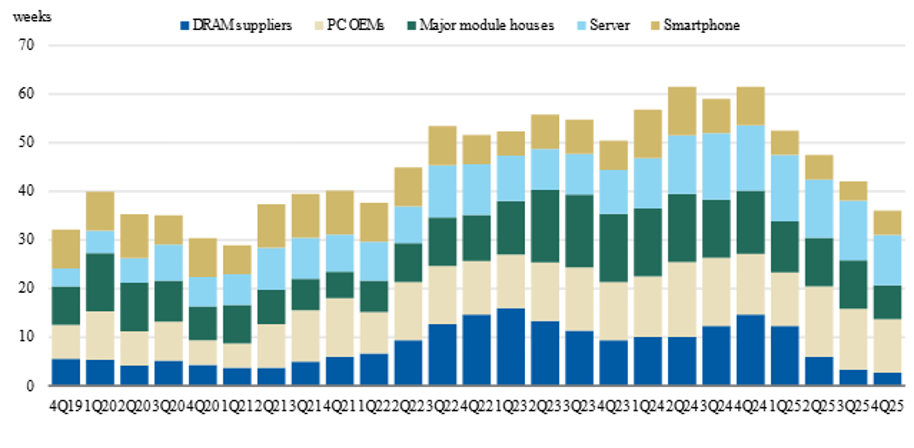

Inventory and why it is not a reliable shock absorber

Inventory conditions across the ecosystem have shifted. In recent years, many channels built inventory buffers during periods of uncertainty. As the market tightened and product transitions accelerated, those buffers have been drawn down. What remains is often unevenly distributed.

Some inventory is held by large OEMs, hyperscalers, or major module houses. Some is committed to specific platforms or customers. Some does not match current qualification requirements. The implication is that inventory can exist in the system while buyers still experience scarcity for the parts that matter to their builds.

Figure: DRAM Inventory Levels (source: Morgan Stanley Research and TrendForce, Jan 2026)

This is particularly relevant for DDR4-dependent programs and for qualified enterprise storage configurations. Where substitution is difficult, buyers tend to secure coverage earlier and hold it longer. That behavior reduces the availability of flexible supply and amplifies short-term disruptions.

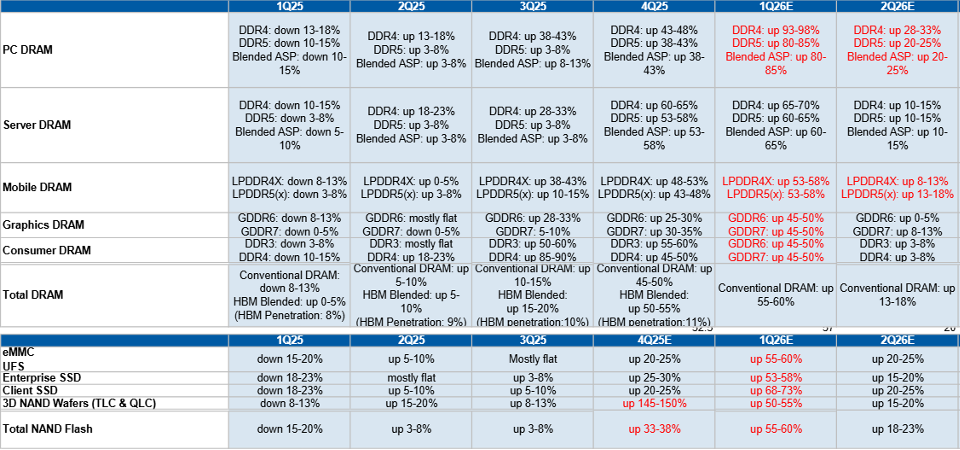

Implications for pricing and availability

The market implication is straightforward: the greatest pricing pressure and availability risk sit at the intersection of two factors, constrained supply and limited alternative options.

For DRAM, legacy products are exposed because supply is being deprioritized while demand persists. DDR4 is the clearest example. As production attention shifts to DDR5 and HBM, DDR4 tightness can emerge quickly, and pricing can move sharply because buyers have fewer near-term alternatives. In contrast, DDR5 continues to benefit from strong platform momentum, but pricing is still influenced by capacity being pulled toward HBM and by the pace of server buildouts.

For NAND and SSDs, availability and pricing are increasingly shaped by enterprise demand and by the full component stack behind finished drives. When enterprise storage demand is strong, upstream NAND pricing can firm, but the more immediate pain points for many buyers are finished drive allocation, extended lead times, and the need to secure the right configuration rather than any SSD at any price.

Overall, the market is signaling a period when volatility is more likely to show up as allocation events and step changes in quotes, not just gradual movement. Buyers should expect continued tightness in parts tied to AI infrastructure and in legacy products that suppliers are intentionally steering away from.

Figure: Memory and Storage Pricing Trends and Forecast for Q1’26 and Q2’26 (source: Morgan Stanley Research and TrendForce)

Where to go from here: Arrow’s recommendations

The most effective response is to treat memory and storage as schedule-critical inputs, not as late-stage commodities. The following actions consistently reduce risk:

Build a DDR4 continuity plan.

If you have platforms that require DDR4, define the coverage horizon now. Identify last-time-buy requirements, validate alternate sources where feasible, and avoid waiting until pricing dislocates or allocations tighten further. Where possible, align future designs and refresh cycles to DDR5 adoption so you are not anchored to a shrinking supply base.

Use forecasting as a supply tool.

In a managed market, credible demand visibility improves allocation outcomes. Share forecasts early, refresh them routinely, and align them to production schedules. Buyers who provide clean visibility tend to see better continuity than those who rely on ad hoc ordering.

Consider longer-term commitments for constrained items.

For parts that are hard to substitute or that sit in the most constrained segments, longer-term agreements can stabilize supply and reduce price surprises. The right structure depends on your risk tolerance and demand certainty, but the broader principle holds: committed demand is prioritized.

De-risk enterprise SSD programs by validating the full stack.

Treat SSD availability as a system outcome, not a NAND outcome. Validate controller availability, substrates, power loss protection components, and on-drive DRAM assumptions. Confirm that alternates are qualified or qualify them proactively. This is often where programs slip when teams focus only on NAND supply.

Segment your purchasing strategy by criticality.

Not every part deserves the same approach. For highly constrained or hard-to-replace parts, prioritize continuity and security of allocation. For more flexible items, staged buying tied to confirmed demand can manage working capital while reducing exposure to sudden price moves.
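As a rough illustration of the segmentation described above, the rule can be written as a small decision function. This is only a sketch: the `Part` fields, the example part names, and the strategy labels are hypothetical, and a real program would use its own criticality criteria.

```python
from dataclasses import dataclass


@dataclass
class Part:
    name: str
    constrained: bool    # hypothetical flag: supply is tight or on allocation
    substitutable: bool  # hypothetical flag: a qualified alternate exists


def purchasing_strategy(part: Part) -> str:
    """Pick a buying approach based on how critical the part is."""
    # Highly constrained or hard-to-replace parts: prioritize continuity.
    if part.constrained or not part.substitutable:
        return "secure_allocation"
    # More flexible items: staged buying tied to confirmed demand.
    return "staged_buying"


if __name__ == "__main__":
    # Illustrative parts only, not real supply data.
    parts = [
        Part("legacy DDR4 module", constrained=True, substitutable=False),
        Part("commodity client SSD", constrained=False, substitutable=True),
    ]
    for p in parts:
        print(f"{p.name}: {purchasing_strategy(p)}")
```

Keeping the rule this explicit makes it easy to review with engineering and finance before it drives any purchasing decision.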

Budget with scenarios and triggers.

Instead of a single price assumption, build a range and define triggers for action. Pre-approve alternates and escalation paths so that procurement does not stall when quotes change quickly.
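One way to picture a price range with triggers is a simple lookup from an incoming quote to a pre-agreed action. The sketch below is illustrative only: the `PriceScenario` fields, the thresholds, the prices, and the action names are all assumptions, not figures or processes from Arrow.

```python
from dataclasses import dataclass


@dataclass
class PriceScenario:
    base: float  # hypothetical budgeted unit price
    high: float  # hypothetical upper bound of the planned range


def action_for_quote(quote: float, scenario: PriceScenario) -> str:
    """Map an incoming quote to a pre-approved action."""
    if quote > scenario.high:
        # Outside the planned range: use the pre-approved escalation path.
        return "escalate"
    if quote > scenario.base:
        # Inside the range but above budget: consider pre-approved alternates.
        return "evaluate_alternates"
    return "buy_as_planned"


if __name__ == "__main__":
    scenario = PriceScenario(base=100.0, high=130.0)  # illustrative prices
    for quote in (95.0, 115.0, 140.0):
        print(quote, action_for_quote(quote, scenario))
```

Because the actions are agreed in advance, a sudden step change in quotes leads to a known next step instead of a stalled purchase.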

Closing perspective

This market is being reshaped by structural demand and disciplined supply behavior. The organizations that navigate it best will be those that plan earlier, qualify intelligently, and align procurement strategy to technology transitions.

Connect with our team today to learn how Arrow’s Intelligent Solutions can support your organization by translating market signals into practical allocation plans, qualification strategies, and sourcing structures that help protect schedules while managing cost and risk.

Unpacking NVIDIA GTC Paris 2025

June 2025

I’ve returned from NVIDIA’s GTC event in Paris. In just a matter of weeks, NVIDIA took over half of Porte de Versailles and transformed it into the epicenter of AI conversation. It was impressive not just in terms of scale but in how it laid out where this industry is heading. The fact that NVIDIA recognized the value of hosting a GTC event in Europe speaks volumes about the opportunities in the region.

In his keynote, CEO Jensen Huang outlined a vision touching on sovereign AI, industrial supercomputing, and agentic systems, as well as the convergence of AI and quantum technologies. He encouraged European enterprises not just to adopt AI but to build and run it locally, aligning with the EU’s broader ambitions to assert digital independence and develop homegrown AI infrastructure.

Let’s unpack a few of the highlights from the NVIDIA GTC Paris 2025 event:

The Spotlight on Agentic AI

One of Huang’s central themes was how we’ve moved from perception AI (sensors and computer vision) to generative AI (text, images, code) and now into agentic AI. Unlike traditional AI systems that passively respond to prompts, agentic AI can make decisions and take actions independently, transforming AI from a tool into a trusted digital collaborator.

NVIDIA introduced several key innovations in this space:

- NeMo Agent: a modular, task-driven framework for building autonomous AI agents that can plan and reason

- AI Blueprint: a production-ready framework for building, testing, and deploying agentic and generative AI workflows

- Agentic AI Safety Framework: a framework for ensuring autonomous AI systems operate safely, ethically, and reliably

These tools can enable organizations to build AI systems that automatically collect, analyze, and feed back data into models for ongoing improvement and refinement. There is a clear shift from co-pilots to AI systems that act with purpose, handle operational tasks, and learn in real time, opening the door to more autonomous customer service, operations, and engineering workflows.

The notable shift from NVIDIA in the agentic AI conversation was that modern AI infrastructure isn't just about compute, but about building intelligence pipelines that continuously fuel and evolve AI systems in a secure, scalable, and compliant manner.

Industrial AI: NVIDIA’s AI Factory Vision and The Indisputable Edge

Another major highlight was the introduction of AI Factories, or rather, next-gen data centers powered by NVIDIA's Blackwell GPUs and Omniverse tools. These facilities serve as the digital engines of Europe's AI ecosystem, supporting industries like automotive, manufacturing, and healthcare. NVIDIA showcased collaborations with some of Europe’s top automotive manufacturers and healthcare providers.

With AI Factories comes a renewed focus on edge computing as a foundational layer in NVIDIA’s broader vision. While centralized data centers orchestrate training and coordination, the edge performs real-world inference. It acts as the first point of contact for data collection and decision-making on factory floors, in vehicles, and across field equipment.

For Arrow customers, this signals an accelerating need for robust, deployable edge infrastructure that bridges physical operations and digital intelligence. As enterprises invest in AI-driven automation, edge platforms remain essential for Industrial AI.

AI and Quantum: A Converging Frontier

It's challenging to determine how close or distant the convergence of AI and quantum computing is; for some industries, this convergence is closer than for others. Nevertheless, we gained a glimpse of an early yet clear vision for integrating AI workloads and quantum technologies, enabling quantum processors to handle highly specialized computations while GPUs manage traditional workloads. These innovations are designed to speed the development of quantum error correction. With this news came the launch announcement of CUDA-Q on Blackwell systems and collaboration with Denmark’s Gefion supercomputer.

From Concept to Scale and Possibility to Practicality – AI with Arrow

We recognize that AI is rapidly advancing across industries, and yet, turning innovation into practical solutions remains a significant challenge.

Organizations face a growing array of options, including but not limited to NVIDIA. And while NVIDIA’s latest platforms unlock extraordinary capabilities, not every use case demands that level of investment. In many cases, modular, off-the-shelf edge solutions may be more appropriate and efficient.

That’s where Arrow comes in. Our team understands the full AI infrastructure stack and applies this expertise to help solution builders translate their IP into deployable, production-grade systems. Whether it’s choosing the right processor for an edge AI workload, integrating GPUs into a thermally constrained enclosure, or managing compliance testing and imaging, we handle the complex platform engineering that happens behind the scenes.

Whether you’re deploying agentic AI at scale or piloting smart edge devices, we work with you to deliver solutions that align with your goals, timelines, and investment strategy. Feel free to reach out to me or one of our experts to learn how we can help you advance your AI-driven technologies, powered by NVIDIA.
